TL;DR

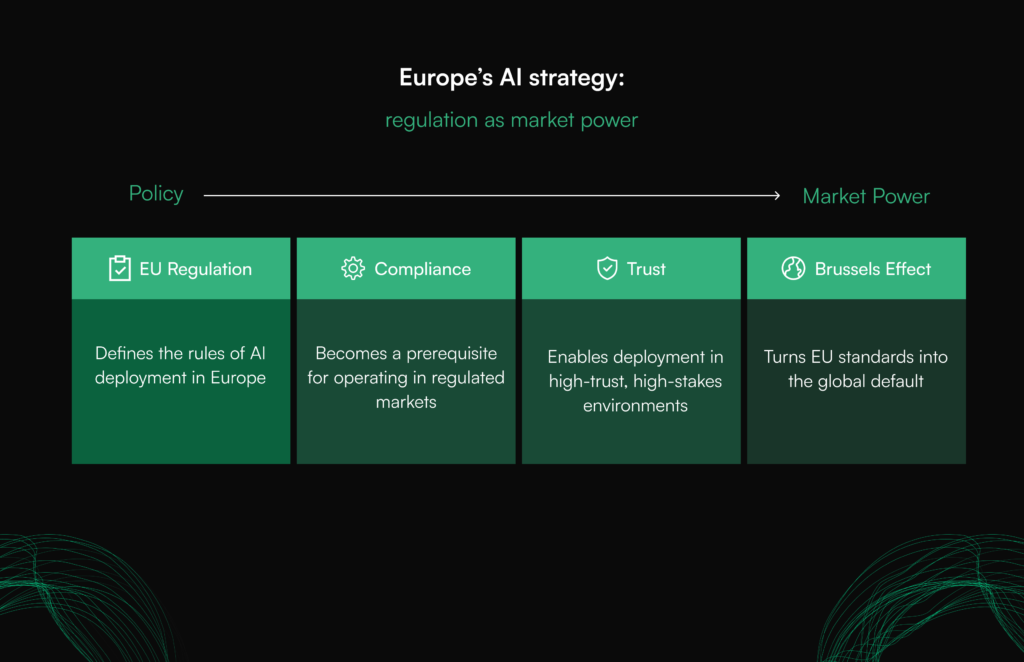

Europe isn’t racing to win the AI scale war; it’s racing to define the rules of trusted AI. Through GDPR, the Digital Services Act, and the EU AI Act, the European Union is reshaping the conditions under which AI systems can be deployed, trusted, and scaled in regulated markets.

This is not regulation for its own sake. It is a calculated effort to influence and catalyze sovereign AI development by making compliance-by-design a prerequisite for market access. For businesses, the message is increasingly clear: align early with Europe’s governance-first direction, or risk exclusion from the markets that will define the future of trustworthy technology.

Introduction: A Different Approach to AI Leadership

They called it overreach.

They called it innovation-killing red tape.

They were wrong, but not because Europe is trying to outbuild Silicon Valley or outscale China.

While much of the world debated AI ethics in theory, the European Union was operationalizing trust through law. Europe is not claiming to have “solved” AI governance. What it has done is more subtle and arguably more powerful: it is defining the conditions under which AI can legally operate in healthcare, finance, government, and critical infrastructure.

This distinction matters. Control over AI systems does not come from data location alone. It comes from governance, jurisdiction, and enforceable oversight.

This is not leadership by model size or compute. It is leadership by market access.

Key Takeaways

- EU regulation is industrial policy.

The EU AI Act, GDPR, and the Digital Services Act (DSA) form a deliberate regulatory stack that reshapes market rules and procurement conditions. It influences how sovereign AI systems are designed, deployed, and governed. The aim is not to restrict innovation but to channel it toward trusted, compliant use cases.

- Compliance is now a competitive advantage.

In regulated sectors such as healthcare, finance, and critical infrastructure, being AI Act-ready is becoming a core procurement requirement and a durable market moat.

- Trust is the new product differentiator.

Companies that build AI systems aligned with GDPR privacy-by-design and DSA transparency requirements from day one are best positioned to win long-term adoption.

- The Brussels Effect is beginning to take hold.

Europe’s approach to AI regulation is increasingly shaping global standards, making early alignment with EU rules a strategic advantage beyond the European market.

The Ghost of Missed Revolutions

Europe carries the memory of missed technological revolutions. The continent that invented the web watched Silicon Valley dominate the internet economy and China master large-scale digital platforms. Its digital sovereignty eroded while foreign platforms captured value.

When AI emerged as the next epoch-defining technology, Europe faced a familiar choice: depend again, or shape the terms.

This time, Europe is pairing governance with capital.

Across the region, governments and private actors are investing in large-scale AI infrastructure, including national compute initiatives, gigawatt-scale data center capacity, and public–private partnerships designed for regulated workloads. The emergence of European AI champions such as Mistral reflects a parallel effort to ensure foundational AI capabilities are developed within Europe’s legal and governance framework.

These investments do not eliminate Europe’s structural challenges overnight. But they signal intent: regulation is being matched with infrastructure, not used as a substitute for it.

The Regulatory Stack: Europe's Digital Fortress

Europe’s response appears as three boring legal texts: GDPR, the Digital Services Act, and the AI Act. Viewed separately, they’re regulations. Viewed together, they form a deliberate architectural blueprint for sovereign AI, a “Regulatory Stack” designed not to limit, but to liberate European innovation.

Layer 1: The Data Moat (GDPR)

Like a moat, GDPR doesn’t stop innovation; it controls access.

By making privacy-by-design legally mandatory, Europe reshaped the starting conditions for AI development. Companies building in Europe learned to treat data governance as a foundational requirement, not an afterthought. Foreign platforms, by contrast, were forced into costly retrofits and compliance workarounds.

Jurisdiction matters here as much as location. Extraterritorial laws such as the U.S. CLOUD Act can compel providers under U.S. jurisdiction to disclose data regardless of where it is physically stored, which can expose European data to foreign legal reach.

Layer 2: The Transparency Mandate (Digital Services Act)

By targeting “Very Large Online Platforms”, a category dominated by global incumbents, the DSA limits the strategic advantage of opaque, black-box algorithms. Algorithmic systems that shape information, commerce, and public discourse are now subject to transparency, accountability, and oversight requirements.

This sunlight does more than regulate behavior. It weakens scale-driven opacity and creates space for auditable, explainable alternatives to compete.

Layer 3: The Arena Construction (AI Act)

This is where Europe’s strategy becomes explicit. The EU did not shut the gates on AI; it built the arena and defined who is allowed to compete inside it.

Through its risk-based framework, the AI Act treats many AI systems used in healthcare, finance, and critical infrastructure as high-risk and allows them to operate only if they meet strict governance, documentation, and oversight requirements. In these markets, compliance is no longer optional; it is the price of entry.
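As a rough illustration of how a risk-based gate might sit in a deployment pipeline, the Python sketch below checks a system's declared risk tier against the evidence it has on file. The tier names follow the commonly cited four-level reading of the AI Act. The `REQUIRED_EVIDENCE` mapping, the evidence labels, and the `may_deploy` helper are hypothetical simplifications made up for this example, not the Act's legal test.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # prohibited practices
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical evidence labels; the actual obligations are defined in the Act.
REQUIRED_EVIDENCE = {
    RiskTier.HIGH: {
        "risk_management",
        "technical_documentation",
        "human_oversight",
        "logging",
    },
    RiskTier.LIMITED: {"transparency_notice"},
    RiskTier.MINIMAL: set(),
}


@dataclass
class AISystem:
    name: str
    tier: RiskTier
    evidence: set = field(default_factory=set)


def missing_evidence(system: AISystem) -> set:
    """Return the evidence labels still missing for the system's tier."""
    return REQUIRED_EVIDENCE.get(system.tier, set()) - system.evidence


def may_deploy(system: AISystem) -> bool:
    """Prohibited systems never deploy; others need complete evidence."""
    if system.tier is RiskTier.UNACCEPTABLE:
        return False
    return not missing_evidence(system)


# Usage: a high-risk system with only part of its evidence on file.
triage = AISystem(
    "triage-assistant", RiskTier.HIGH, {"technical_documentation", "logging"}
)
print(may_deploy(triage))                # False
print(sorted(missing_evidence(triage)))  # ['human_oversight', 'risk_management']
```

The point of the sketch is the shape of the check: the tier decides which evidence is required, and deployment is blocked until that evidence exists.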

The Strategy Behind Europe’s AI Advantage

Seen together, Europe’s approach reveals a clear logic:

Problem: Limited access to hyperscale capital and unconstrained data.

Insight: Power lies in defining operating conditions, not winning on speed.

Strategy: Use the single market to make governance a competitive moat.

Execution: Build compliance into procurement rather than leaving it as an afterthought.

Outcome: In regulated sectors, governance-ready AI systems are structurally better positioned to compete.

This shift is already visible. While consumer AI dominates headlines, European AI companies are increasingly becoming trusted suppliers to hospitals, banks, and governments, environments where governance matters more than benchmark gains.

When Trust Becomes the Product

AI is entering its trust phase. The early excitement around generative capability is giving way to deployment in environments where errors, opacity, and loss of control carry real consequences.

Consider two vendors pitching a European hospital:

Vendor A: “Our model is 5% more accurate.”

Vendor B: “Our system meets EU AI Act high-risk requirements, with audit trails, explainability, and GDPR-compliant data handling.”

In Europe’s regulated markets, Vendor B doesn’t just win; Vendor A often cannot compete at all.

This is the regulatory moat in action.

From Europe’s Rules to Europe-Ready AI

For Open Innovation AI (OIAI), Europe’s governance-first approach is not a future compliance hurdle; it is the definitive blueprint for the infrastructure that will power trusted enterprise AI. We recognize that successful market entry hinges on meeting specific, nuanced regulatory demands. Our platform is architected from the ground up to turn these requirements into core capabilities.

We address Europe’s key regulatory asks directly:

- AI Supply Chain Transparency & SBOMs: A core ask of the AI Act is to demystify the AI supply chain. Open Innovation AI enables comprehensive Software Bill of Materials (SBOM) generation for every AI workload, providing auditable documentation of model provenance, training data lineage, and all software dependencies. This isn’t just a report; it’s built-in traceability that satisfies mandatory transparency obligations for high-risk systems.

- Sovereign Control Plane Management: Regulations demand that organizations, not their vendors, maintain ultimate control. OIAI’s platform, OICM, provides a granular, policy-driven control plane that governs the entire AI lifecycle: data, models, compute, and inference. This ensures deployments can enforce strict data residency rules and maintain operational sovereignty.

- Infrastructure for Sensitive & Air-Gapped Workloads: For sectors like healthcare, defense, and finance, the requirement for air-gapped or highly restricted environments is paramount. Open Innovation AI is designed to deploy and manage AI workloads in these isolated configurations, ensuring sensitive data and models never traverse unauthorized networks, meeting the highest bar for data protection and operational security.
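To make the first two items more concrete, here is a minimal Python sketch of what an SBOM-style record for an AI workload and a data-residency policy check could look like. It does not show the OICM API. Every name in it (`WorkloadManifest`, `ALLOWED_REGIONS`, `check_residency`, `to_audit_json`, the field names, and the example values) is a hypothetical stand-in, and the manifest layout does not follow any particular SBOM standard.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class WorkloadManifest:
    model_name: str
    model_sha256: str          # digest of the model artifact (provenance)
    training_datasets: tuple   # dataset identifiers (lineage)
    dependencies: tuple        # pinned software packages
    deploy_region: str


# Hypothetical residency policy: regions where this workload may run.
ALLOWED_REGIONS = frozenset({"eu-central", "eu-west"})


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def check_residency(manifest: WorkloadManifest, allowed=ALLOWED_REGIONS) -> bool:
    """True if the workload's deployment region is permitted by policy."""
    return manifest.deploy_region in allowed


def to_audit_json(manifest: WorkloadManifest) -> str:
    """Serialize the manifest deterministically for an audit trail."""
    return json.dumps(asdict(manifest), sort_keys=True, indent=2)


# Usage with placeholder values.
manifest = WorkloadManifest(
    model_name="example-classifier",
    model_sha256=sha256_hex(b"placeholder model bytes"),
    training_datasets=("dataset-a",),
    dependencies=("example-lib==1.0.0",),
    deploy_region="eu-central",
)
print(check_residency(manifest))  # True
print(to_audit_json(manifest))
```

A real deployment would more likely emit an established SBOM format and enforce the policy in the control plane itself; the sketch only shows the two ideas side by side.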

By embedding these governance features at the infrastructure layer, Open Innovation AI transforms compliance from a complex, post-development retrofit into a native operational state. Organizations adopting our platform don’t just prepare for European market entry; they build a durable, trust-based competitive advantage that aligns with the most stringent global standards now emerging.

Conclusion: The New Axis of AI Power

Europe’s lesson is counter-intuitive but clear: in AI, regulatory power is technological power.

While others focus on scale, Europe is shaping the environment in which AI can be trusted, adopted, and sustained. The next decade of AI competition will not be decided by models alone, but by governance, control, and societal legitimacy.

Europe is defining those boundaries. Companies that align early will help shape what comes next.