OICM is a powerful AI cluster management platform designed for enterprises to orchestrate, scale, and optimize AI workloads securely and efficiently.

Built-in multi-tenancy, RBAC, and governance tools

Supports any GPU provider without vendor lock-in

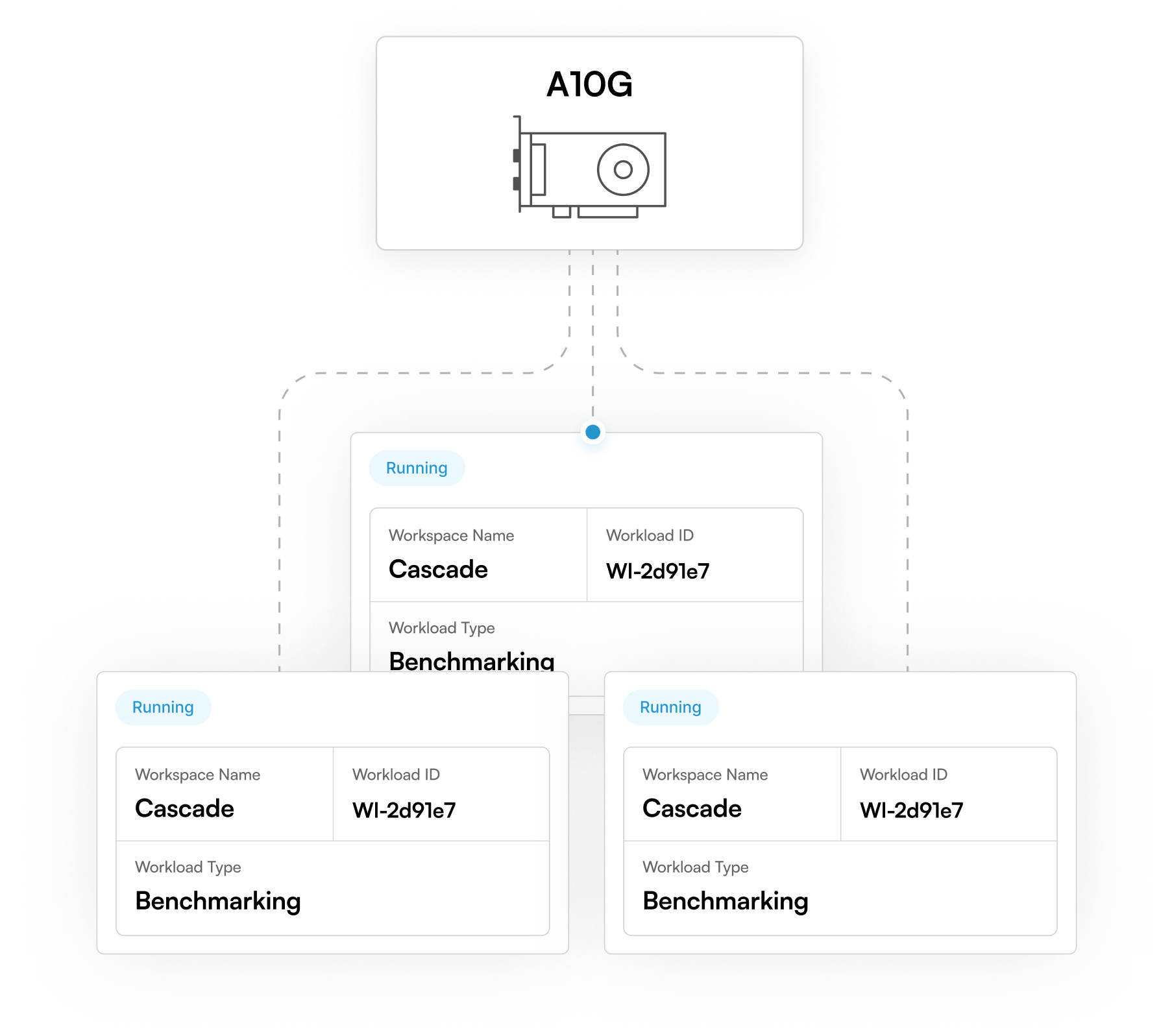

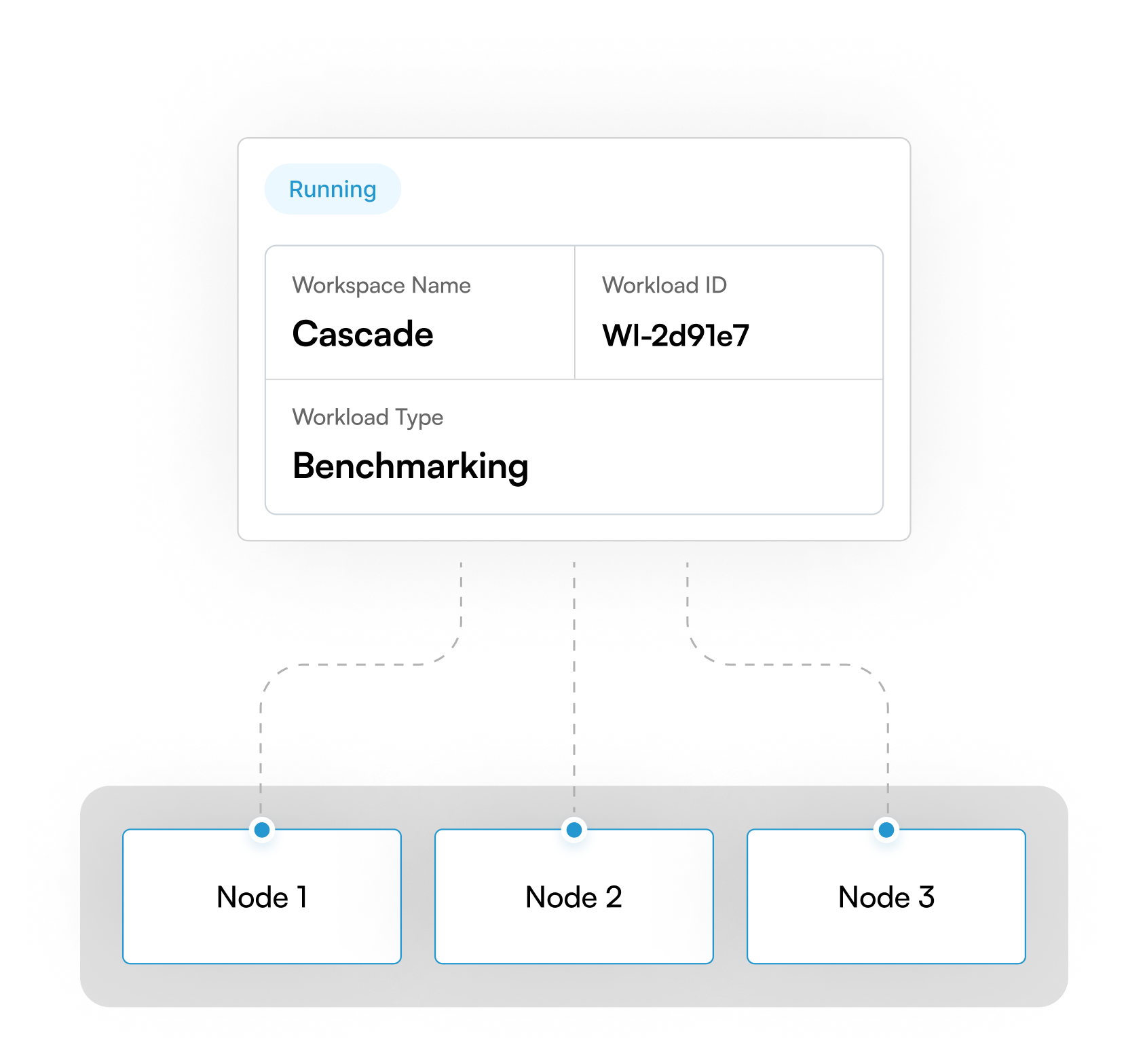

Intelligent workload scheduling and resource management

Orchestrate AI workloads across clusters with full control.

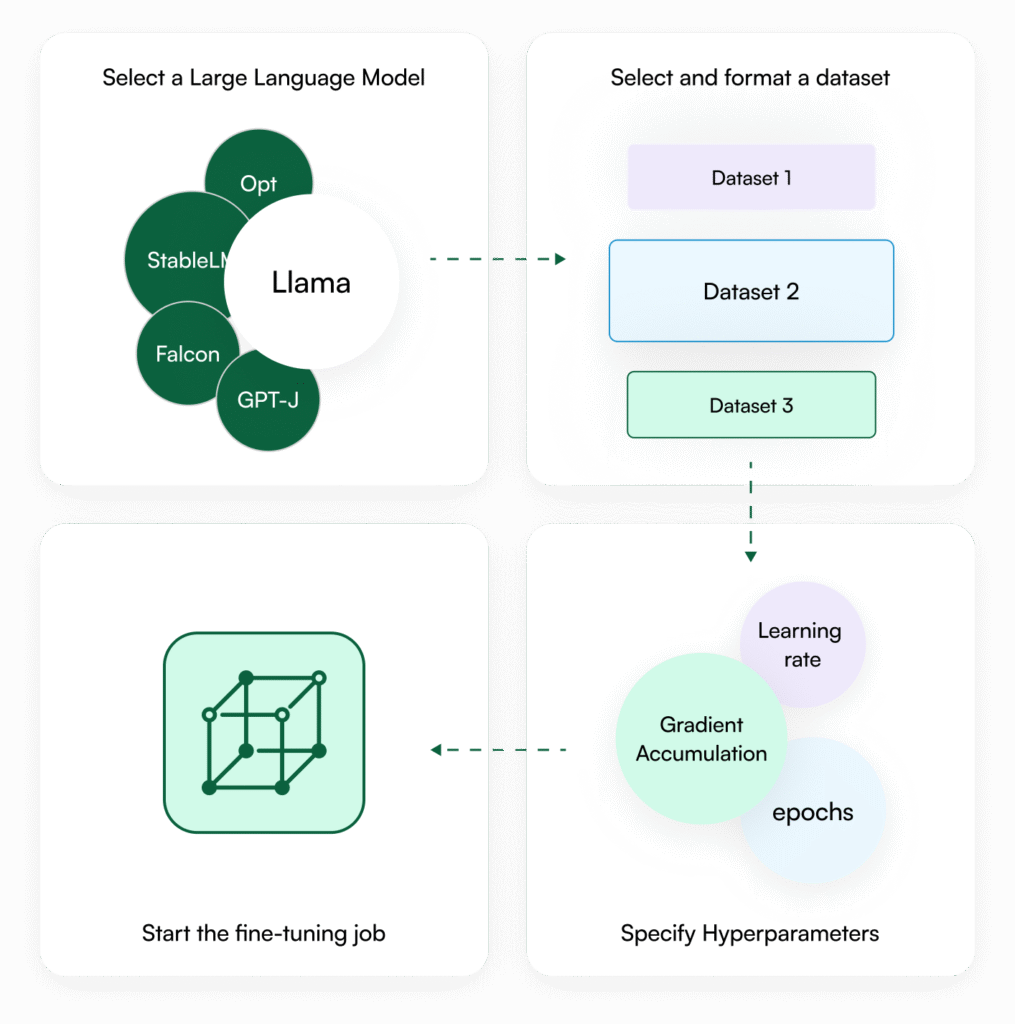

From provisioning to production-ready LLM workflows, in hours, not weeks.

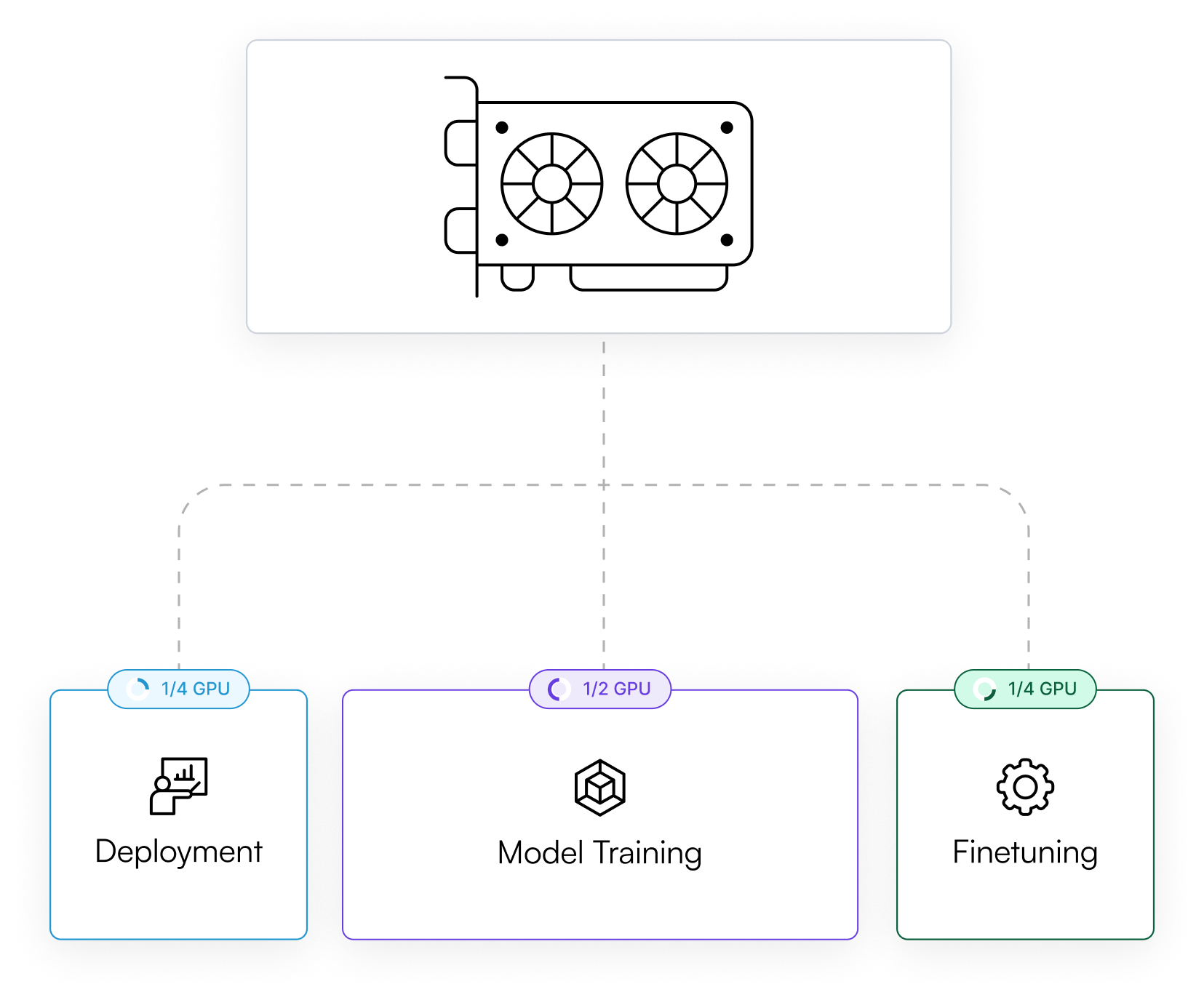

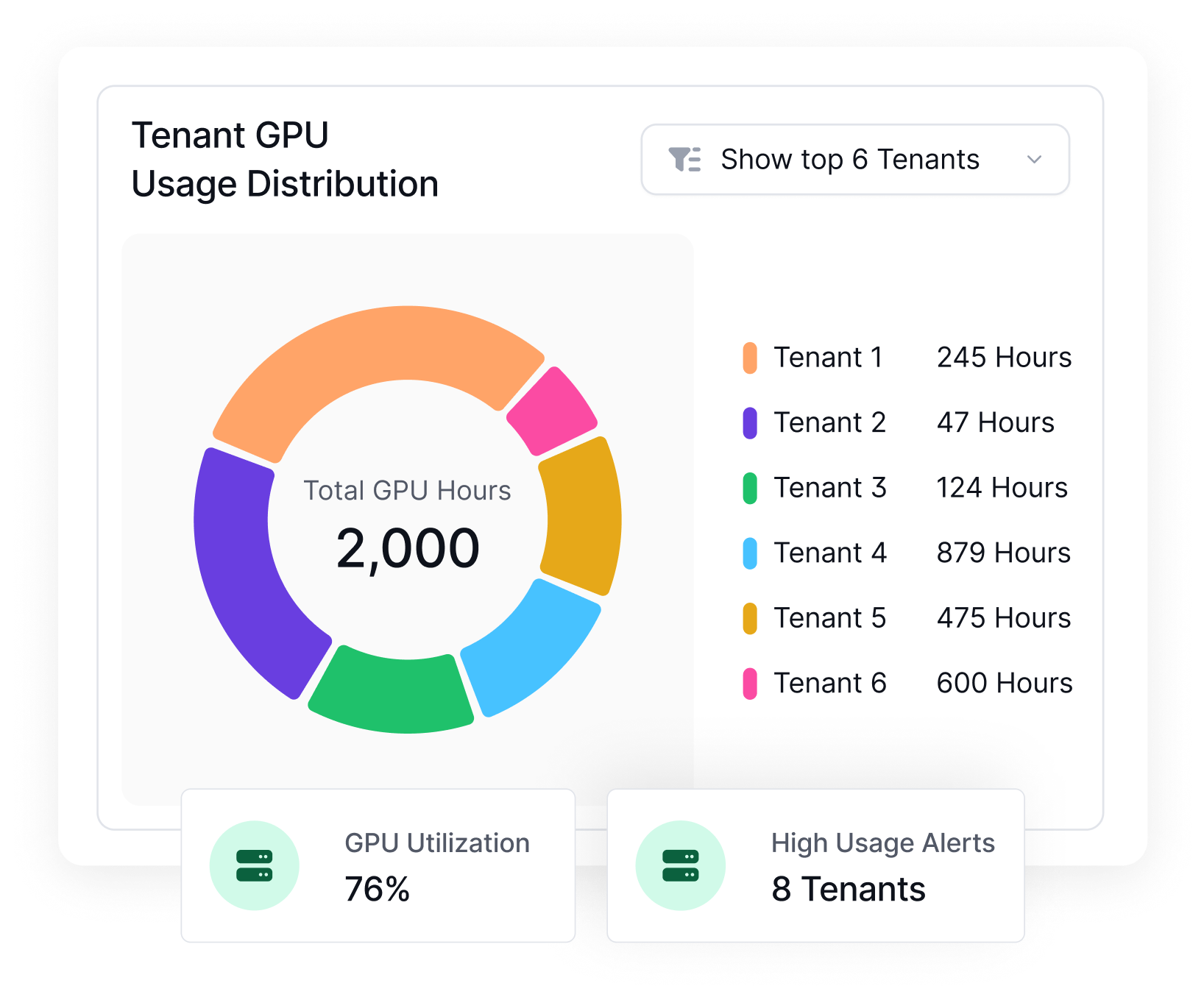

Maximize usage with multi-tenancy, job-aware scheduling, and GPU fractioning.

Optimize for per-second GPU billing and auto-scaling with zero idle capacity.

Deploy on your own cloud, region, or bare-metal servers, with no lock-in.

A secure, flexible platform to orchestrate GPU infrastructure across multi-tenant, multi-cluster, and multi-cloud environments.

CEO and founder

SVP Partnership, WWT

Regional VP, PureStorage

Chief Researcher, TII

CEO, Nscale

Yes, OICM supports fractional GPU allocation, enabling you to divide a single GPU into smaller portions (e.g., 1/2, 1/4) and assign them to different jobs to optimize GPU utilization.
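To illustrate the idea of fractional allocation, here is a minimal Python sketch that packs fractional GPU requests onto whole GPUs using a simple first-fit rule. It is not OICM's scheduler or API; the function name `first_fit`, the job names, and the first-fit policy are all assumptions chosen for illustration.

```python
from fractions import Fraction

def first_fit(requests, num_gpus):
    """Place each (job, share) request on the first GPU with enough free share.

    Hypothetical illustration only: real schedulers also weigh memory,
    priorities, and isolation constraints.
    """
    free = [Fraction(1)] * num_gpus  # each GPU starts fully available
    placement = {}
    for job, share in requests:
        for gpu, available in enumerate(free):
            if share <= available:
                free[gpu] = available - share
                placement[job] = gpu
                break
        else:
            placement[job] = None  # no GPU has enough free capacity left
    return placement, free

# Hypothetical jobs requesting 1/2 or 1/4 of a GPU.
requests = [
    ("train-a", Fraction(1, 2)),
    ("notebook-b", Fraction(1, 4)),
    ("infer-c", Fraction(1, 2)),
    ("infer-d", Fraction(1, 4)),
]
placement, free = first_fit(requests, num_gpus=2)
print(placement)
print(free)
```

In this example, three of the four jobs share the first GPU and fill it completely, while the second GPU keeps half of its capacity free for later requests.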

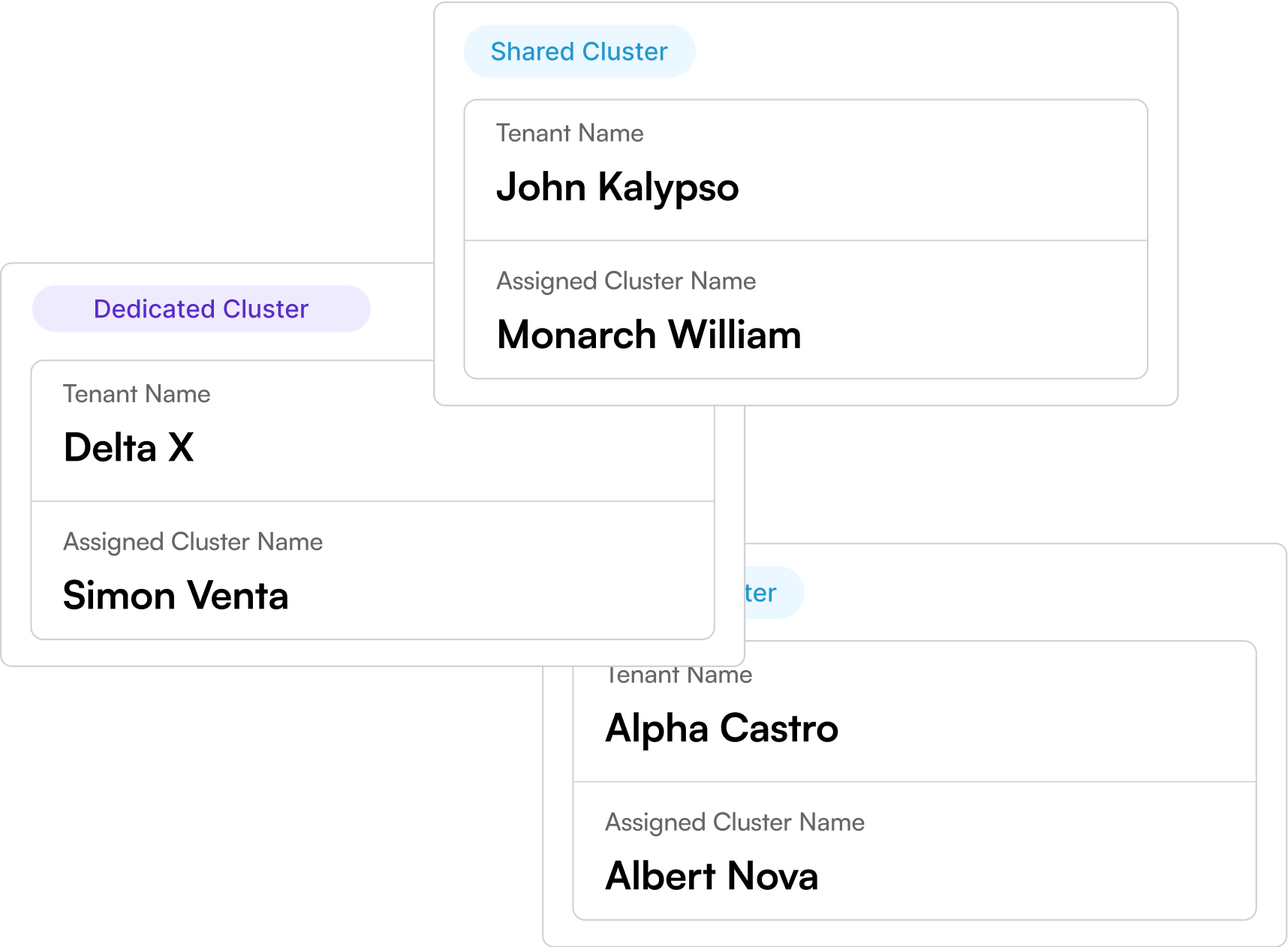

Each tenant operates in an isolated environment with dedicated namespaces or clusters, compute quotas, and role-based access control for secure resource segregation.
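As a rough sketch of how a per-tenant compute quota might be enforced, the Python snippet below tracks GPU usage against a limit and rejects requests that would exceed it. The `TenantQuota` class and its fields are hypothetical and do not reflect OICM's actual data model or API.

```python
from dataclasses import dataclass

@dataclass
class TenantQuota:
    """Hypothetical per-tenant GPU quota (not an OICM type)."""
    gpu_limit: float
    gpu_used: float = 0.0

    def try_allocate(self, gpus: float) -> bool:
        """Reserve GPUs if the tenant stays within its limit."""
        if self.gpu_used + gpus > self.gpu_limit:
            return False  # request would exceed the tenant's quota
        self.gpu_used += gpus
        return True

    def release(self, gpus: float) -> None:
        """Return GPUs to the tenant's pool, never dropping below zero."""
        self.gpu_used = max(0.0, self.gpu_used - gpus)

quota = TenantQuota(gpu_limit=2.0)
first = quota.try_allocate(1.5)   # fits within the 2.0 GPU limit
second = quota.try_allocate(1.0)  # would bring usage to 2.5, so it is rejected
third = quota.try_allocate(0.5)   # exactly fills the remaining quota
```

Keeping the check and the update in one method means a rejected request never changes the tenant's recorded usage.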

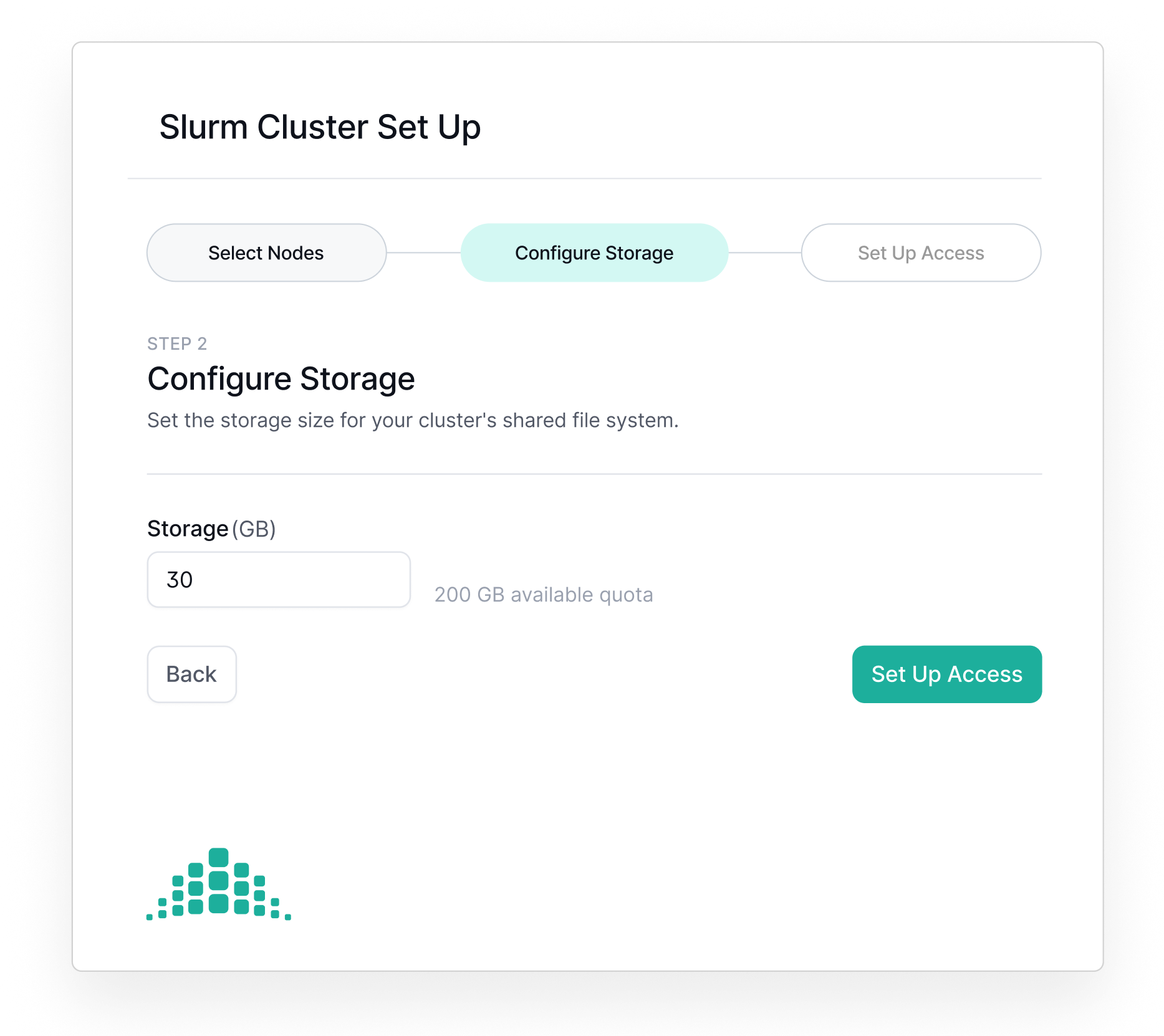

Yes, OICM includes native integration with both SLURM and Kubernetes, enabling hybrid orchestration across batch and containerized workloads.

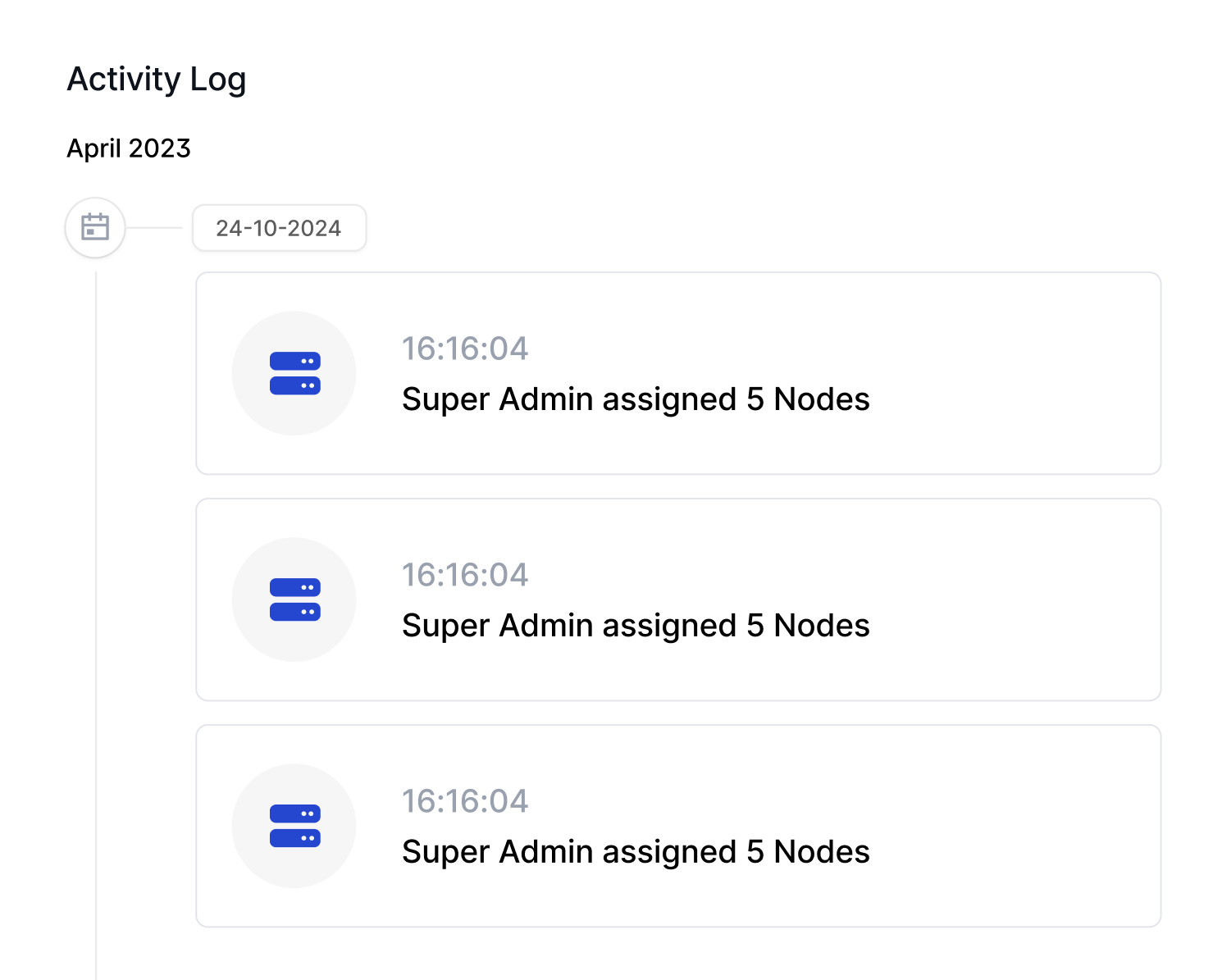

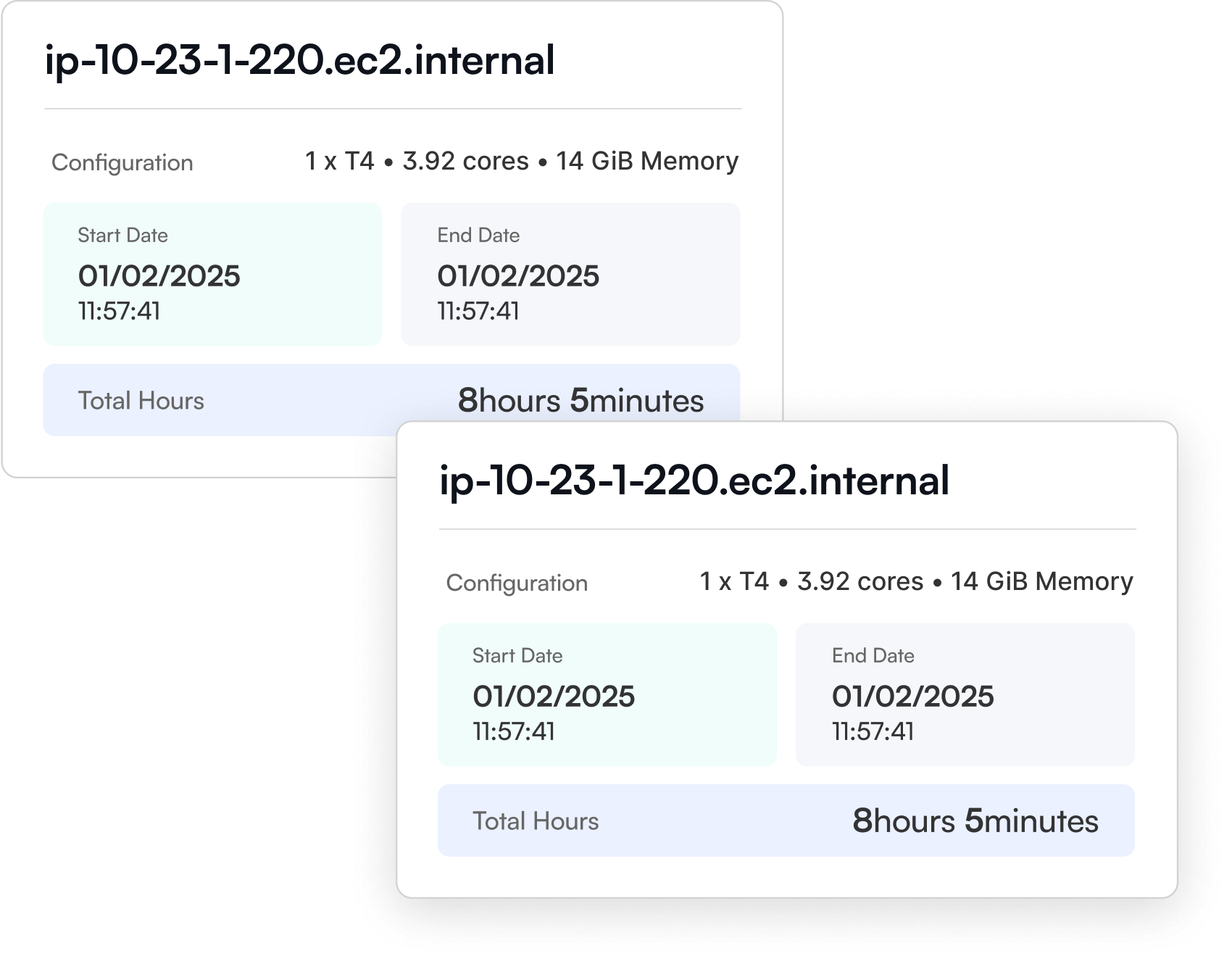

Absolutely. You can track usage metrics (CPU, GPU, memory) per workspace or tenant, along with billing and quota visibility.

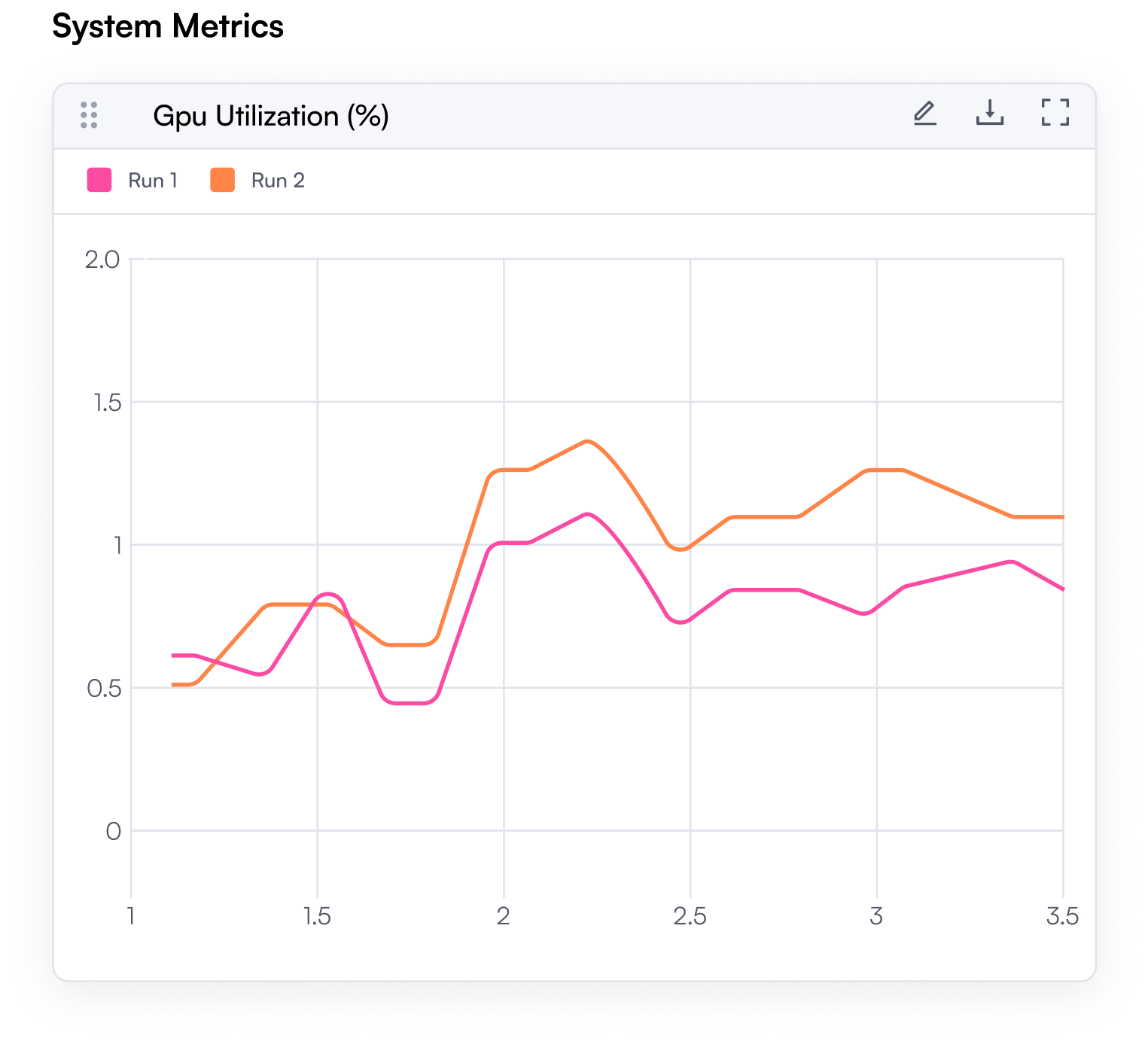

The monitoring layer provides real-time dashboards, logs, and alerting for all infrastructure components, including nodes, GPUs, agents, and jobs.

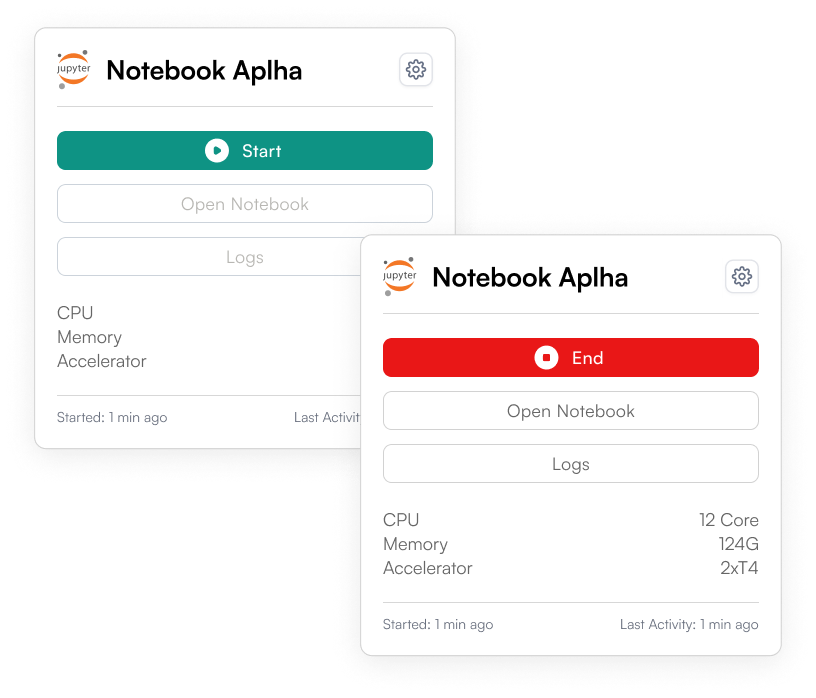

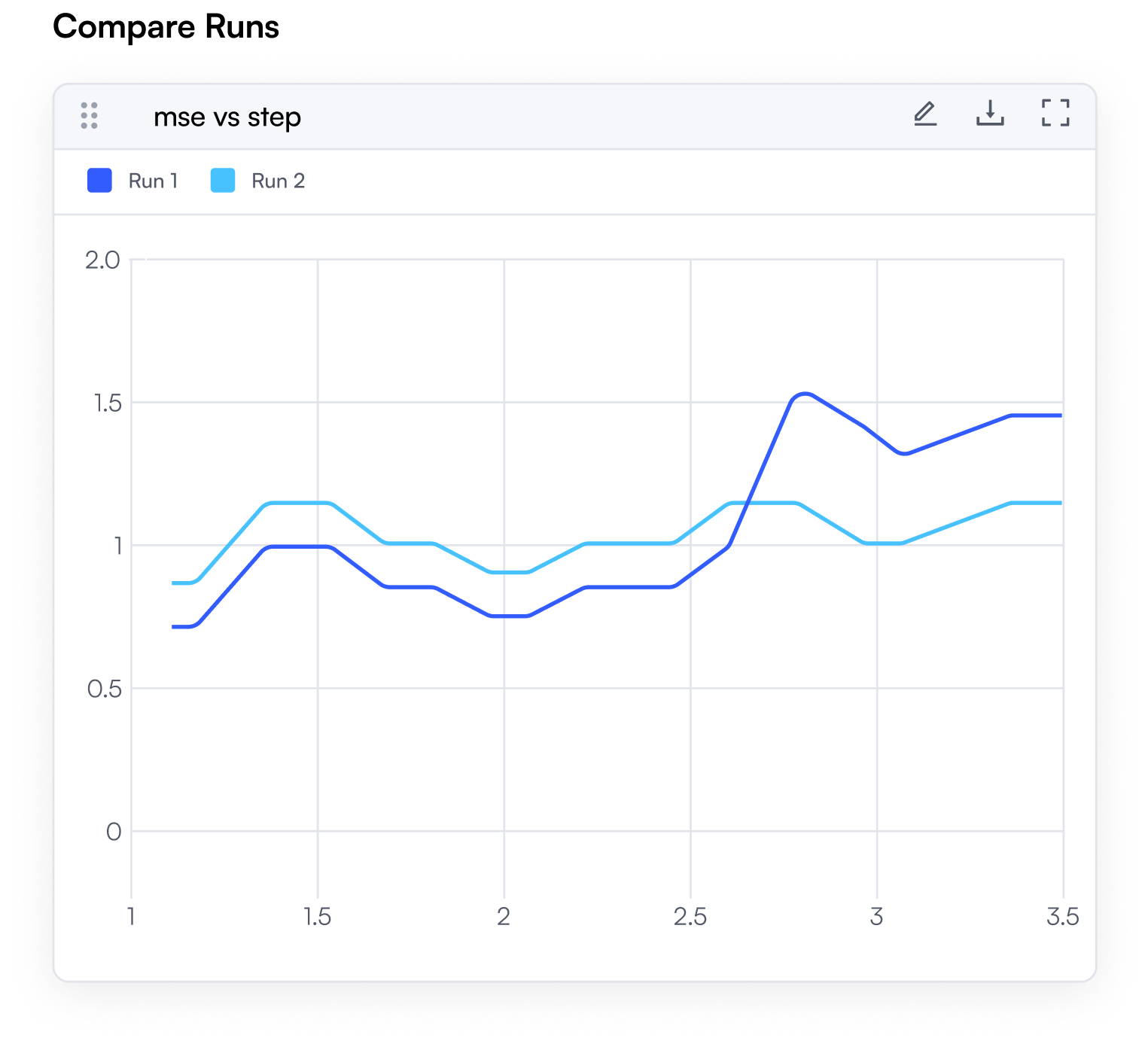

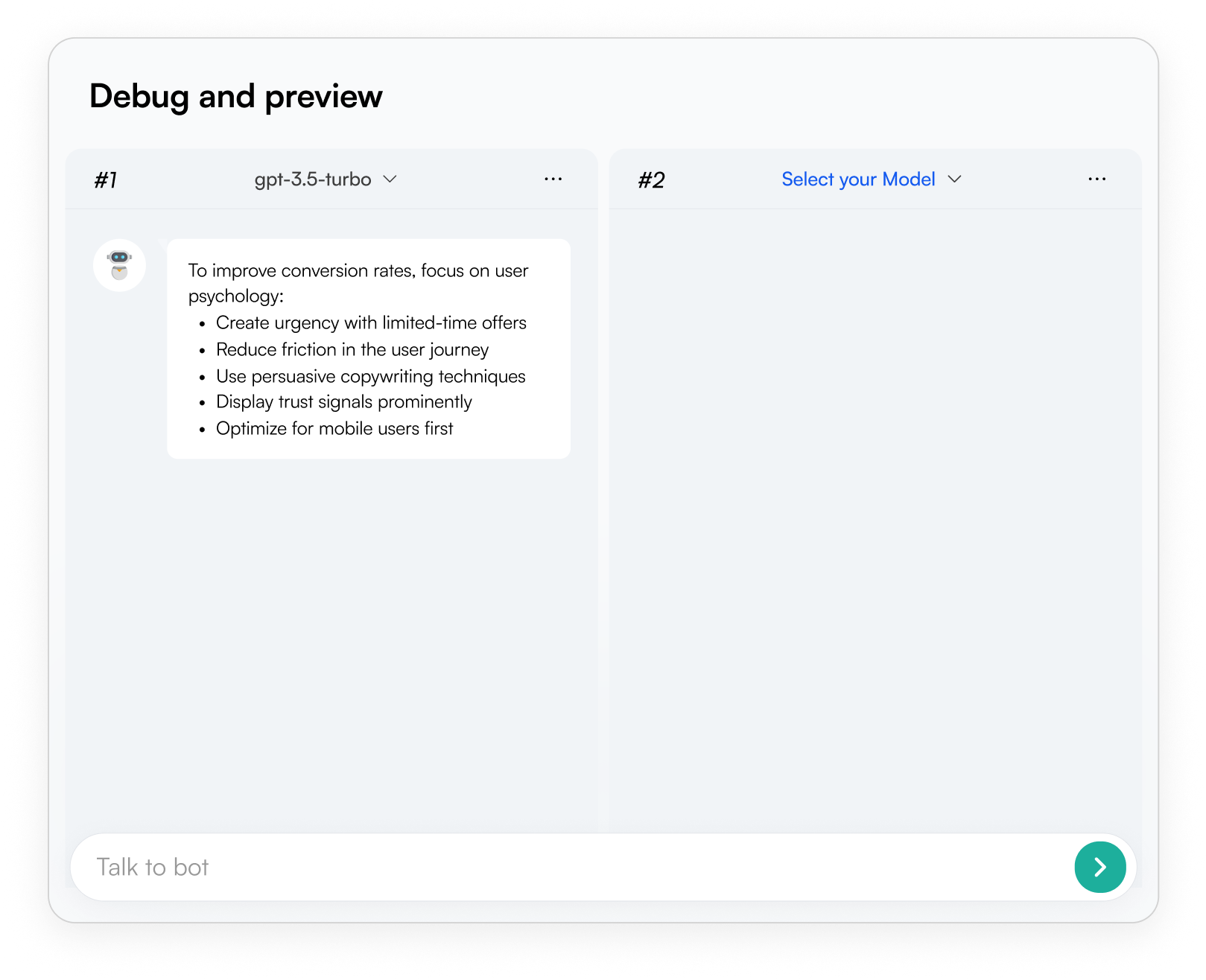

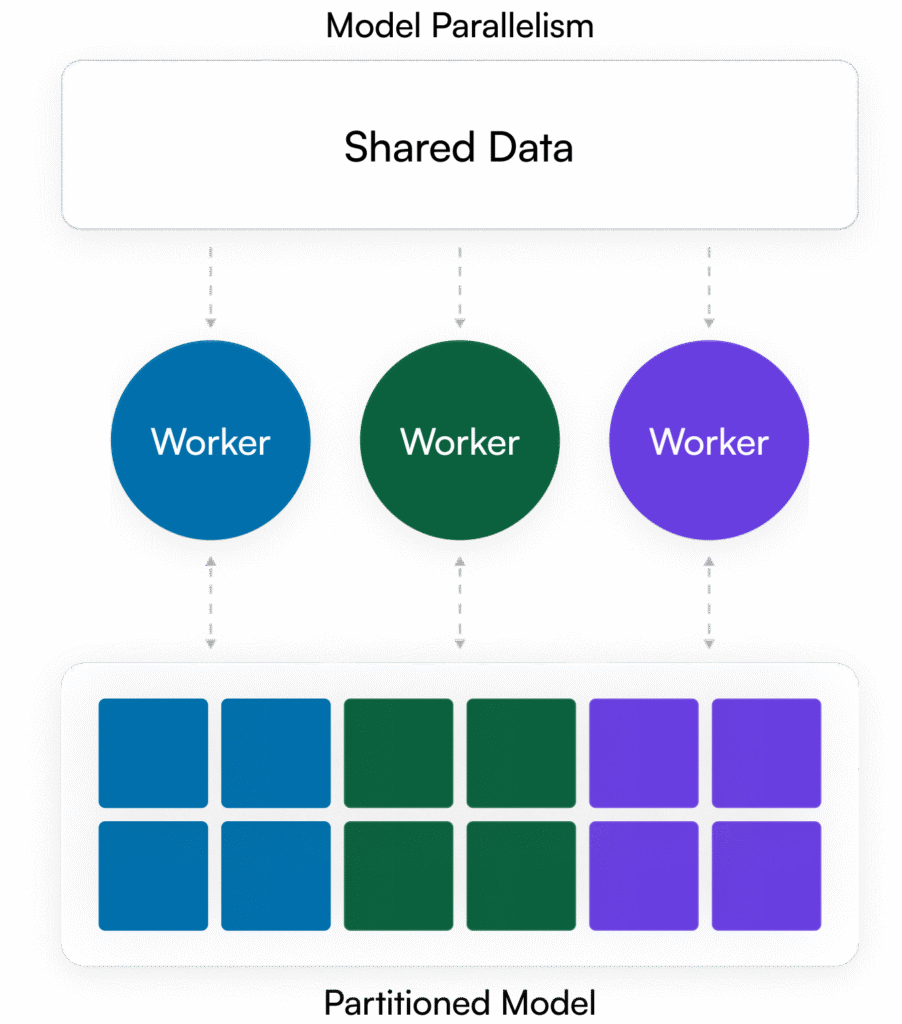

OICM supports the entire AI and machine learning lifecycle, from experimentation to production. You can use it to train and fine-tune models, run distributed training jobs, and more. Once your models are ready, OICM helps you deploy them to production, conduct A/B testing between different versions, and benchmark their performance. It also provides a secure, GPU-optimized inference environment, along with managed Jupyter notebooks for data exploration and experimentation.

For more details, check out all of our features in the OICM documentation.

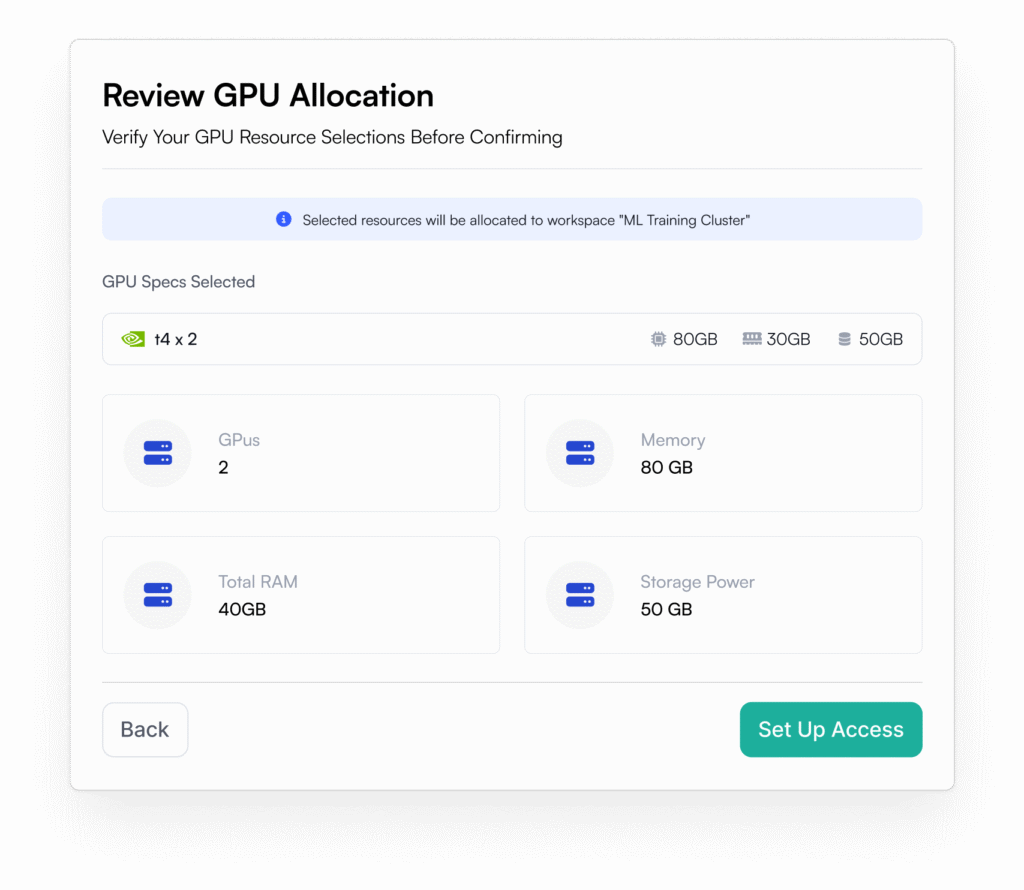

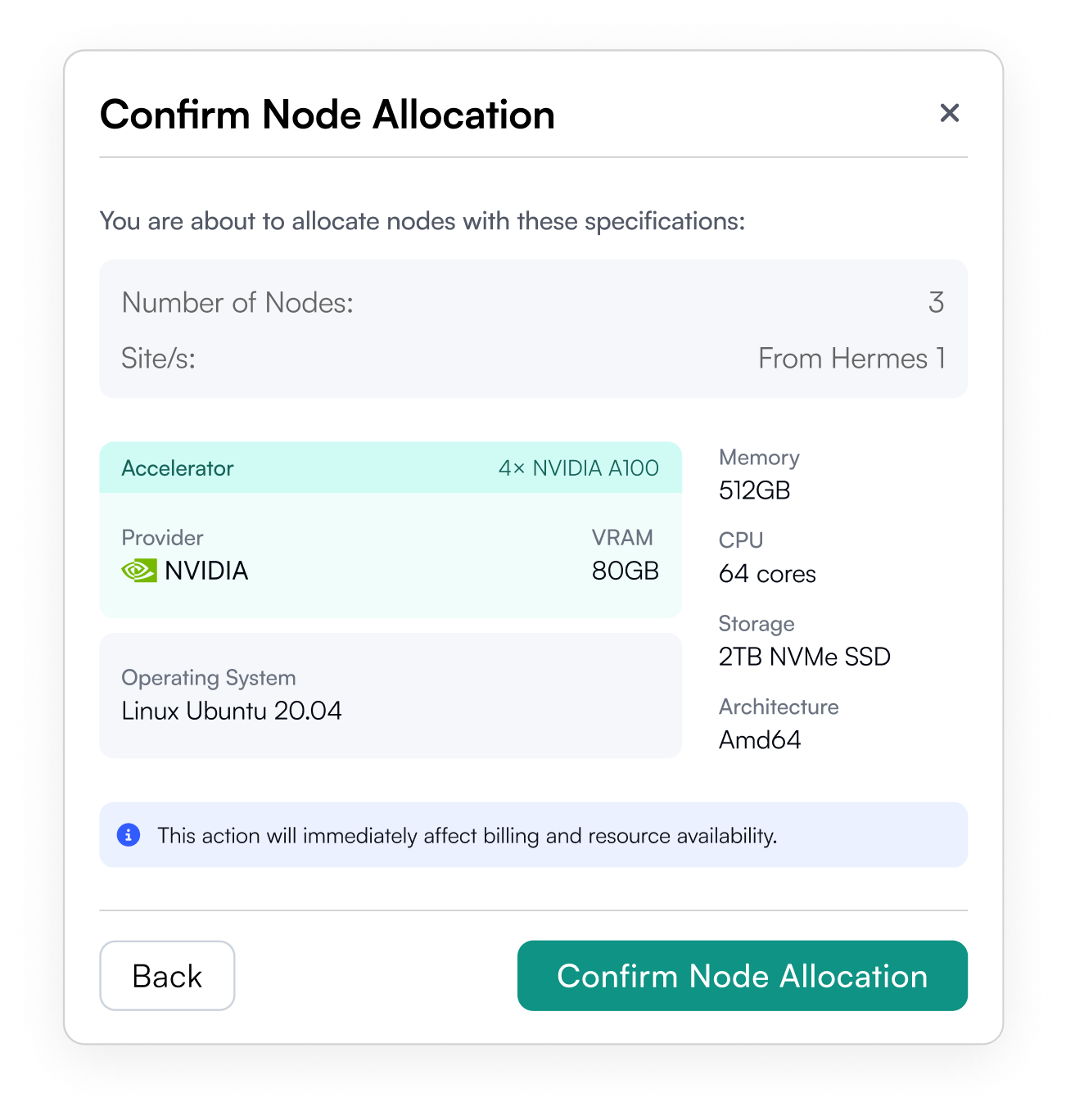

To enable GPU access for your team, your platform administrator first needs to onboard your Kubernetes cluster to OICM. Once onboarded, platform administrators can manage access and allocate GPU resources to specific teams or projects through the OICM portal. This ensures that GPU usage is controlled, secure, and aligned with your team’s needs. OICM gives you full visibility and control over how infrastructure resources are allocated and shared across your organization.

For step-by-step guidance, visit the OICM documentation.