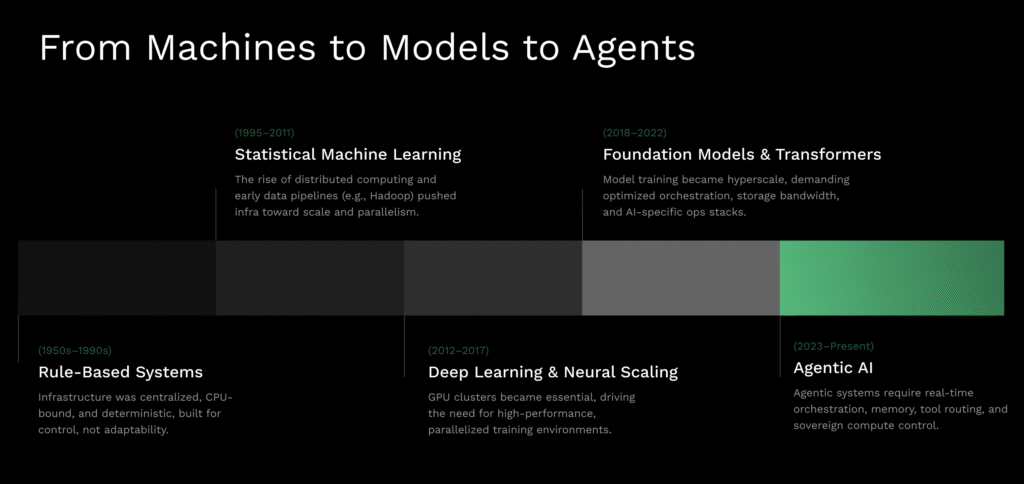

Enterprises are moving from single models to agentic systems that plan, act, and learn across many applications. That shift only works at scale when you keep control of data, infrastructure, and operations.

This article explains what sovereignty means in practice, how a sovereign agent stack fits together, and how to go from pilot to production without losing control.

Open Innovation appears where it is most useful, as the orchestration engine and control plane that helps connect these pieces.

Why sovereignty matters when you move from models to agents

Most teams already use large models for classification, summarization, or search. Agents raise the stakes. An agent does not just answer a prompt. It plans a sequence of steps, calls tools and APIs, stores context for later, and adapts to feedback. That new behavior increases value, but it also increases the surface area for risk. Now you must decide where data lives, how tools are authorized, where workloads run, who can audit what happened, and how to roll back when behavior drifts.

This is where sovereignty becomes more than a slogan. Sovereignty means you keep sustainable independence across the AI lifecycle. Data stays within the right borders and classifications. Workloads run where policy allows. Models and their versions are under your control. Operators inside your organization can understand, measure, and improve the system without external gatekeepers.

The sovereign agent stack, as one system

Think of the stack as five layers that cooperate, not five boxes on a slide.

Governance and control

This is the guardrail layer. Role-based access, audit trails, policy checks, drift detection, and incident investigation live here. When an agent tries to use a tool, this layer confirms it is allowed. When behavior changes, this layer raises the alert and provides the evidence.

Agent runtime

The runtime maintains the working memory, the plan, and the loop that takes observations, chooses actions, and evaluates results. It also handles fallbacks. If a tool is down, the runtime tries a backup path and records the decision.

Tool and router

Every action passes through a gate that validates schemas, adds authentication, and applies rate limits and circuit breakers. This keeps your systems safe from malformed requests and helps you reason about cost and performance.

Model store

Models, prompts, adapters, and evaluation data need versioning and lineage. The store supports canary releases, staged rollouts, and clean rollback. In regulated environments this is essential for audit and for continuous improvement.

Orchestration and compute

Training, fine-tuning, and inference require placement, scaling, and isolation across on-prem, private cloud, public cloud, and sometimes air-gapped environments. Orchestration enforces data locality, applies GPU quotas, and decides when to burst or to keep jobs inside a boundary.

Open Innovation fits across these layers as the engine that coordinates policy-aware placement, hybrid orchestration, and runtime control. In other words, it helps your governance decisions and agent decisions show up as concrete scheduling, scaling, and logging outcomes in real clusters.

How sovereign agents work in the real world

Let’s connect the architecture to a real-world flow.

Imagine a public sector team launching a multilingual document assistant. The agent ingests records, answers citizen questions, and initiates back-office actions. Some documents are public, others are restricted by law. Certain tasks can run in the public cloud, while others must remain on state infrastructure. The team wants faster response times and lower costs, but only if they can show full traceability.

This sets the stage for sovereignty in action. Governance proves who did what and when. The runtime plans actions safely. The tool router checks permissions before accessing a system of record. The model store versions everything, allowing quick rollback if needed. Orchestration places each job in the right cluster, with data locality enforced by policy.
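The tool router step in this flow can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Open Innovation's API: `ToolGate`, `ToolSpec`, and every field name are hypothetical, the schema check is a simple type check, and authentication and rate limiting are left out to keep the sketch short.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Set


class ToolCallRejected(Exception):
    """Raised when the gate refuses a call before it reaches the tool."""


@dataclass
class ToolSpec:
    # Hypothetical registry entry; adapt the fields to your own tools.
    handler: Callable[[dict], Any]
    required_args: Dict[str, type]   # argument name -> expected type
    allowed_roles: Set[str]
    failure_threshold: int = 3       # consecutive failures before the circuit opens
    cooldown_seconds: float = 30.0
    failures: int = 0
    opened_at: Optional[float] = None


@dataclass
class ToolGate:
    tools: Dict[str, ToolSpec] = field(default_factory=dict)
    audit_log: List[dict] = field(default_factory=list)

    def call(self, role: str, tool_name: str, args: dict) -> Any:
        spec = self.tools.get(tool_name)
        if spec is None:
            raise self._reject(role, tool_name, "unknown tool")
        # Permission check happens before anything touches the system of record.
        if role not in spec.allowed_roles:
            raise self._reject(role, tool_name, "role not permitted")
        # Minimal schema validation: every required argument has the expected type.
        for name, expected in spec.required_args.items():
            if not isinstance(args.get(name), expected):
                raise self._reject(role, tool_name, f"bad argument: {name}")
        # Circuit breaker: refuse calls while the tool is cooling down.
        if spec.opened_at is not None:
            if time.monotonic() - spec.opened_at < spec.cooldown_seconds:
                raise self._reject(role, tool_name, "circuit open")
            spec.opened_at = None  # cooldown elapsed, allow one trial call
        try:
            result = spec.handler(args)
        except Exception:
            spec.failures += 1
            if spec.failures >= spec.failure_threshold:
                spec.opened_at = time.monotonic()
            self._log(role, tool_name, "error", "handler raised")
            raise
        spec.failures = 0
        self._log(role, tool_name, "ok", "")
        return result

    def _log(self, role: str, tool: str, outcome: str, reason: str) -> None:
        self.audit_log.append(
            {"role": role, "tool": tool, "outcome": outcome, "reason": reason}
        )

    def _reject(self, role: str, tool: str, reason: str) -> ToolCallRejected:
        self._log(role, tool, "rejected", reason)
        return ToolCallRejected(reason)
```

Every path through `call` writes an audit entry, including rejections, so the governance layer can later show who attempted what and why it was refused.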

The same design applies beyond government.

In financial services, a bank deploys a compliance analysis agent that reviews transactions and drafts investigator notes. Public data enrichment runs in a cloud region, but any request containing personal identifiers stays in-country. The orchestrator enforces this separation while the agent runtime preserves context securely. Canary releases in the model store allow safe upgrades without disrupting operations.

In healthcare, a provider pilots an intake assistant that extracts structured data from referrals. The agent reads PDFs, validates the data through a secure tool, and writes results into an EHR system. GPU quotas prevent this workload from starving clinical decision support during morning peaks. Canary strategies enable new vision models to be rolled out clinic by clinic.

Across these contexts, Open Innovation acts as the sovereignty-aware control point connecting governance rules to real orchestration decisions, ensuring agents operate with autonomy while respecting compliance, locality, and resource boundaries.

From principle to practice: the four pillars, with actions

It is easy to list pillars. It is more useful to map each pillar to a few actions you can take next quarter.

Data sovereignty

• Classify data by sensitivity and residency.

• Set routing rules that block restricted classes from leaving specific regions.

• Use storage locations and VPC boundaries that match the classification.
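The three data sovereignty actions above can be expressed as a small rule table. The sketch below is an assumption-laden illustration: the sensitivity levels, the `MAY_LEAVE_HOME_REGION` table, and the region names are invented for the example and would come from your own classification scheme.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Sensitivity(Enum):
    PUBLIC = 1
    INTERNAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class DataClass:
    sensitivity: Sensitivity
    home_region: str  # region where this data must reside


# Hypothetical policy table: which sensitivity levels may leave their home region.
MAY_LEAVE_HOME_REGION = {
    Sensitivity.PUBLIC: True,
    Sensitivity.INTERNAL: False,
    Sensitivity.RESTRICTED: False,
}


def allowed_regions(data: DataClass, candidates: List[str]) -> List[str]:
    """Return the candidate regions this data class is allowed to reach."""
    if MAY_LEAVE_HOME_REGION[data.sensitivity]:
        return list(candidates)
    return [region for region in candidates if region == data.home_region]


def route(data: DataClass, candidates: List[str], preferred: str) -> str:
    """Pick the preferred region if policy allows it, else fall back to an allowed one."""
    allowed = allowed_regions(data, candidates)
    if not allowed:
        raise PermissionError("no candidate region satisfies the residency rule")
    return preferred if preferred in allowed else allowed[0]
```

Keeping the rule in one table, rather than scattered across services, makes it easier to review with compliance teams and to test before it is enforced.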

Talent and operations sovereignty

• Build internal runbooks for agent incidents, model rollbacks, and tool outages.

• Upskill existing cloud and DevOps teams on agent runtimes and model operations.

• Require knowledge transfer for any partner build so your team can own day two.

Infrastructure sovereignty

• Decide the default placement for critical workloads and the exceptions that can burst.

• Diversify hardware and clouds to reduce single vendor risk.

• Use an orchestration stack that is provider-agnostic and policy-driven, not tied to one provider.

IP and algorithmic sovereignty

• Prefer open models or models you can adapt and host.

• Track prompt, adapter, and dataset lineage so you can prove ownership and comply with reuse terms.

• Build a lightweight evaluation harness to compare new models before they reach production.
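A lightweight evaluation harness, as mentioned in the last action above, does not need much machinery to start with. The sketch below assumes a model is any callable from prompt string to answer string and scores by case-insensitive exact match; both are simplifying assumptions, and the names `evaluate` and `compare` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]          # assumption: prompt in, answer out
Case = Tuple[str, str]                # (prompt, expected answer)


def evaluate(model: Model, cases: List[Case]) -> float:
    """Fraction of cases where the model's answer matches the expected one."""
    if not cases:
        raise ValueError("evaluation set is empty")
    correct = sum(
        1
        for prompt, expected in cases
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return correct / len(cases)


def compare(
    candidates: Dict[str, Model],
    baseline: str,
    cases: List[Case],
    min_gain: float = 0.0,
) -> Tuple[Dict[str, float], List[str]]:
    """Score every candidate and list those that match or beat the baseline by min_gain."""
    scores = {name: evaluate(model, cases) for name, model in candidates.items()}
    promotable = [
        name
        for name, score in scores.items()
        if name != baseline and score >= scores[baseline] + min_gain
    ]
    return scores, promotable
```

In practice the exact-match scorer would be replaced by whatever metric fits the task, but the shape stays the same: a fixed evaluation set, a baseline, and an explicit bar a new model must clear before it reaches production.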

Open Innovation supports these actions by providing a unified control plane for multi-cluster, multi-cloud scheduling, by enforcing placement and quota policies, and by exposing the logs and metrics that compliance teams expect.

What changes when agents hit production

In pilots, teams focus on accuracy and latency. In production, new concerns appear.

Capacity and placement

You will need GPU-aware scheduling that avoids starvation and noisy-neighbor effects. Bin packing and quotas help you use resources fully without hurting priority workloads. If your traffic is bursty, autoscaling must be tuned for warm pools and cold start impact.
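To make the interaction between bin packing and quotas concrete, here is a minimal sketch using first-fit decreasing, one common packing heuristic. It is not how any particular scheduler works: `Node`, `Job`, and the per-team quota dictionary are hypothetical, and real schedulers also weigh priority, preemption, and topology.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Node:
    name: str
    free_gpus: int


@dataclass(frozen=True)
class Job:
    name: str
    team: str
    gpus: int


def schedule(
    jobs: List[Job], nodes: List[Node], team_quota: Dict[str, int]
) -> Tuple[Dict[str, str], List[str]]:
    """Place jobs largest-first on the first node that fits, within team quotas.

    Returns (job name -> node name, names of jobs left pending).
    """
    used: Dict[str, int] = {}
    placed: Dict[str, str] = {}
    pending: List[str] = []
    for job in sorted(jobs, key=lambda j: j.gpus, reverse=True):
        # Quota check: a team may not exceed its GPU allowance.
        if used.get(job.team, 0) + job.gpus > team_quota.get(job.team, 0):
            pending.append(job.name)
            continue
        # First fit: take the first node with enough free GPUs.
        target = next((n for n in nodes if n.free_gpus >= job.gpus), None)
        if target is None:
            pending.append(job.name)
            continue
        target.free_gpus -= job.gpus
        used[job.team] = used.get(job.team, 0) + job.gpus
        placed[job.name] = target.name
    return placed, pending
```

The quota check runs before placement, so a team that has used its allowance cannot take GPUs from another team even when a node has free capacity.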

Data locality and privacy

Requests that include restricted data must stay inside the right boundary. Your orchestrator needs to understand those rules at scheduling time, not as an afterthought.

Reliability and rollback

Agents will drift. Tool contracts will change. Some prompts will underperform in new contexts. You need a roll-forward and rollback plan that does not interrupt service. Canary and A/B strategies help you prove a change is safe before global rollout.
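One way to picture a canary strategy is a deterministic traffic split plus a rule for widening or withdrawing it. The sketch below is illustrative only: the function names, the 1% tolerance, the 10-point step, and the minimum sample size are assumptions chosen for the example, not recommended values.

```python
import hashlib


def pick_version(request_key: str, stable: str, canary: str, canary_percent: int) -> str:
    """Route a stable share of keys to the canary, deterministically.

    The same key always lands in the same bucket, so a user or a site
    does not flip between versions from one request to the next.
    """
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return canary if bucket < canary_percent else stable


def next_canary_percent(
    current: int,
    canary_errors: int,
    canary_total: int,
    stable_errors: int,
    stable_total: int,
    tolerance: float = 0.01,
    step: int = 10,
    min_samples: int = 100,
) -> int:
    """Widen the canary if it is healthy, withdraw it if it is not."""
    if canary_total < min_samples or stable_total == 0:
        return current  # not enough evidence yet, hold the split
    canary_rate = canary_errors / canary_total
    stable_rate = stable_errors / stable_total
    if canary_rate > stable_rate + tolerance:
        return 0  # rollback: all traffic returns to the stable version
    return min(100, current + step)
```

Rollback here is just setting the percentage to zero, which is why it does not interrupt service: the stable version never stopped serving. Using a site or tenant identifier as `request_key` gives a rollout that proceeds unit by unit rather than request by request.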

Observability and accountability

Leaders will ask why the agent approved a claim or triggered a workflow. You need step-level logs that connect inputs, tool calls, outputs, and policy checks. This is not just for audits. It is the raw material for improvement.
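A step-level log can be as simple as one structured record per step, all sharing a run identifier. The sketch below assumes a hypothetical record layout (`Step`, `RunTrace`, and the field names are invented here) and serializes to JSON lines so the output can feed whatever log pipeline you already run.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class Step:
    run_id: str
    index: int
    kind: str                       # e.g. "model", "tool", or "policy"
    name: str
    inputs: dict
    outputs: dict
    policy_decision: Optional[str]  # e.g. "allowed" or "denied", if a check ran
    timestamp: float


class RunTrace:
    """Collects the steps of one agent run so they can be audited together."""

    def __init__(self, run_id: Optional[str] = None) -> None:
        self.run_id = run_id or uuid.uuid4().hex
        self.steps: List[Step] = []

    def record(
        self,
        kind: str,
        name: str,
        inputs: dict,
        outputs: dict,
        policy_decision: Optional[str] = None,
    ) -> Step:
        step = Step(
            run_id=self.run_id,
            index=len(self.steps),
            kind=kind,
            name=name,
            inputs=inputs,
            outputs=outputs,
            policy_decision=policy_decision,
            timestamp=time.time(),
        )
        self.steps.append(step)
        return step

    def steps_for(self, name: str) -> List[Step]:
        """Return every step that involved the named tool, model, or policy."""
        return [s for s in self.steps if s.name == name]

    def to_jsonl(self) -> str:
        """One JSON object per line, in step order."""
        return "\n".join(json.dumps(asdict(s), sort_keys=True) for s in self.steps)
```

With records like these, answering "why did the agent approve this claim" becomes a query over one run: the inputs it saw, the tools it called, and the policy decisions attached to each call.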

Open Innovation addresses these concerns by combining policy-aware scheduling with canary controls, GPU quotas, and a single view of agent runs and tool calls across clusters. That is what turns governance rules into running systems.

A phased path from pilot to scale

Week 0 to 2: baseline and guardrails

Define data classifications, workload tiers, and default placement. Turn on basic audit and RBAC. Choose one agent use case that delivers clear value without touching the most sensitive systems.

Week 3 to 6: pilot with controls

Connect two or three tools behind the router. Enable evaluation and basic dashboards. Add quotas for GPU and memory so other workloads stay healthy.

Week 7 to 10: resilience and rollout

Introduce canary releases for models and prompts. Add incident playbooks for tool failures and behavior drift. Expand to a second cluster or a second region with data locality rules enforced by the scheduler.

Week 11 to 12: production readiness

Harden monitoring, alerts, and budgets. Document ownership. Review audit samples with security and compliance teams. Plan the next two agent use cases using the same playbook.

Open Innovation supports each step with hybrid orchestration, sovereignty-aware placement, and controls that your platform team can own.

Closing thought

Agentic AI can raise productivity and service quality. The organizations that will benefit most are the ones that keep control while they scale. Treat sovereignty as a design principle, build the stack to match it, and use a control plane that makes policy and practice meet.

If you want help planning the first pilot or connecting your existing tools to a sovereignty-aware orchestrator, we can map it with you in a short working session.