When we built OI Code, our mission was to redefine how enterprises adopt AI-powered development tools without compromising sovereignty or security. The goal was clear: deliver a fully self-hosted, GPU-accelerated coding assistant that operates entirely inside an organization’s infrastructure, even in air-gapped environments.

OI Code enables developers to use modern AI coding features: chat, agentic automation, and intelligent in-line code completions, all within their own environment.

No external APIs. No data exposure. Complete control.

Why Enterprises Need a Sovereign AI Coding Platform

As software engineering teams accelerate digital transformation, many organizations encounter the same barrier: AI copilots that depend on public APIs or internet access are incompatible with regulated or classified systems. Sectors like government, finance, and defense cannot risk sending source code or intellectual property outside their network perimeter.

Typical challenges include:

- Data Sovereignty: Source code must remain within the local infrastructure.

- Compliance & Security: Network isolation and strict audit requirements.

- Limited Internet Connectivity: No external API calls are allowed.

OI Code was designed to solve all of these by bringing the full AI-powered development experience inside the enterprise network.

Why OI Code

OI Code bridges AI productivity and security.

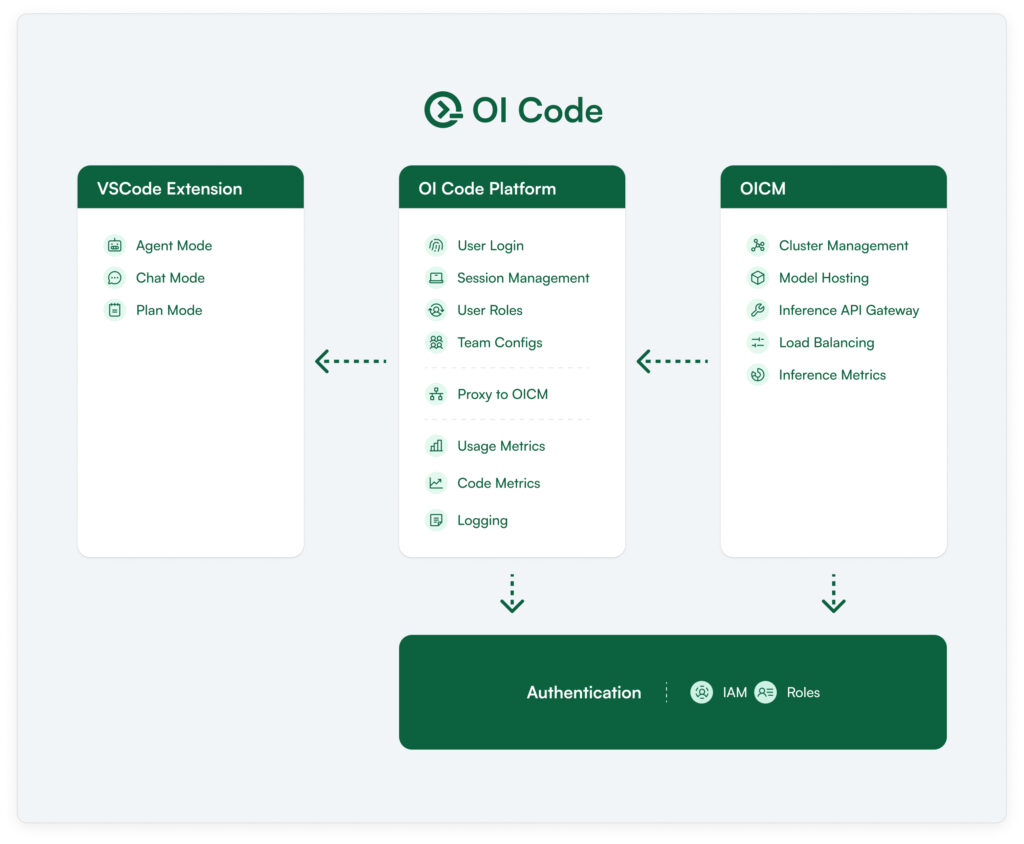

It offers a VS Code-integrated developer assistant that connects only to your internal OI Code Platform, which in turn is powered by Open Innovation Cluster Manager (OICM), your in-house orchestration layer for models and GPUs.

In short:

- OICM manages and serves your models.

- OI Code Platform acts as a secure proxy with RBAC, usage analytics, and rate limits.

- OI Code Extension connects developers’ IDEs to the platform through your internal endpoint.

All inference happens locally, accelerated by your own GPU infrastructure.

No part of the code or prompt ever leaves your environment.

How OI Code Works (End-to-End Flow)

1- Deployment

Models are registered and hosted on internal GPU servers using OICM. Each model exposes a local OpenAI-compatible /chat/completions endpoint.
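As a rough illustration of what "OpenAI-compatible" means in practice, the sketch below builds a request for such an endpoint and reads the reply out of the standard response shape. The host name `models.company.internal` and the `/v1` prefix are assumptions made for the example, not documented OI Code or OICM values; only the /chat/completions path and the model name come from this article.

```python
import json
import urllib.request

# Hypothetical internal base URL; the real host and prefix depend on your deployment.
BASE_URL = "http://models.company.internal/v1"


def build_chat_request(model: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST for an OpenAI-compatible /chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Return the assistant text from an OpenAI-style chat completion response."""
    parsed = json.loads(response_body)
    return parsed["choices"][0]["message"]["content"]


req = build_chat_request("gpt-oss-120b", "Explain this stack trace.")
```

Inside the network, the request could then be sent with `urllib.request.urlopen(req)` and the response body passed to `extract_reply`.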

2- OI Code Platform Setup

The OI Code backend is deployed within the same network (or cluster). It handles:

- Authentication and role-based access control (RBAC)

- Proxy routing to internal models

- Token usage tracking and quotas

- Configuration and policy management
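To make these responsibilities concrete, here is a minimal sketch of how a proxy might combine a role check with token quota tracking before forwarding a request. The `ProxyPolicy` class, its fields, and the role name are hypothetical and only illustrate the idea; they do not describe the actual OI Code backend.

```python
from dataclasses import dataclass, field


@dataclass
class ProxyPolicy:
    """Hypothetical in-memory policy store; a real deployment would persist this."""

    allowed_models: dict[str, set[str]]  # role -> models that role may call
    token_quota: dict[str, int]          # user -> tokens allowed per period
    tokens_used: dict[str, int] = field(default_factory=dict)

    def authorize(self, user: str, role: str, model: str, tokens: int) -> bool:
        """Return True and record usage only if both the role and the quota allow the call."""
        if model not in self.allowed_models.get(role, set()):
            return False  # RBAC: this role has no access to the model
        used = self.tokens_used.get(user, 0)
        if used + tokens > self.token_quota.get(user, 0):
            return False  # quota: the request would exceed the user's allowance
        self.tokens_used[user] = used + tokens
        return True


policy = ProxyPolicy(
    allowed_models={"developer": {"deepseek-coder-33b"}},
    token_quota={"alice": 100},
)
first = policy.authorize("alice", "developer", "deepseek-coder-33b", 60)   # allowed
second = policy.authorize("alice", "developer", "deepseek-coder-33b", 60)  # over quota
```

Rejected requests leave the usage counter untouched, so a denied call does not eat into the user's remaining quota.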

3- Team & Configuration Management

Admins create Configs that group models (e.g., “gpt-oss-120b”, “deepseek-coder-33b”) and assign them to Teams.

Teams automatically inherit access and rate limits.
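The relationship between Configs and Teams can be pictured with a small data model. This is a sketch under stated assumptions: the `Config` and `Team` classes, the `requests_per_minute` unit, and the rule for users who belong to several teams are all invented for illustration and are not taken from the OI Code schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    name: str
    models: tuple[str, ...]
    requests_per_minute: int  # assumed rate-limit unit


@dataclass(frozen=True)
class Team:
    name: str
    config: Config
    members: tuple[str, ...]


def access_for(user: str, teams: list[Team]) -> dict[str, int]:
    """Map each model the user can reach to the rate limit inherited from the team's Config."""
    access: dict[str, int] = {}
    for team in teams:
        if user in team.members:
            for model in team.config.models:
                # Assumption: a user in several teams keeps the most permissive limit.
                access[model] = max(access.get(model, 0), team.config.requests_per_minute)
    return access


coding = Config("coding", ("gpt-oss-120b", "deepseek-coder-33b"), requests_per_minute=60)
backend = Team("backend", coding, members=("alice",))
alice_access = access_for("alice", [backend])
```

Because access is derived from team membership rather than stored per user, changing a Config updates every member of the assigned teams at once.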

4- Developer Access (Offline)

Developers install the OI Code VS Code Extension (distributed as a .vsix package) directly from the portal; no internet access is required.

They connect using a session-based internal URL, for example:

https://oi-code.company.internal/api/auth/login?client=vscode&session_id=abc123
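A URL of this shape can be assembled and sanity-checked with the Python standard library. The helper names and the `.company.internal` suffix check below are hypothetical, added only to show how a client might confirm that it is pointed at an internal host before connecting.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_login_url(base: str, session_id: str, client: str = "vscode") -> str:
    """Assemble the session-based login URL from its parts."""
    query = urlencode({"client": client, "session_id": session_id})
    return f"{base}/api/auth/login?{query}"


def is_internal(url: str, allowed_suffix: str = ".company.internal") -> bool:
    """Return True only if the URL's host ends with the allowed internal suffix."""
    host = urlsplit(url).hostname or ""
    return host.endswith(allowed_suffix)


login_url = build_login_url("https://oi-code.company.internal", "abc123")
session = parse_qs(urlsplit(login_url).query)["session_id"][0]
```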

5- GPU-Accelerated Inference

All requests from the IDE route through the internal OI Code proxy to OICM, where inference runs on local GPUs. The output is returned directly inside VS Code.

Core Capabilities

- Agent, Plan, and Chat Modes

Developers can switch between modes for exploration, safe planning, or code-modifying automation.

- RBAC & Governance

Admins define user roles and team-level configurations, controlling which models can be accessed.

- Usage Dashboards

Real-time visibility into token consumption, model load, and user activity.

- Offline Operation

Fully operational in air-gapped clusters, with no internet dependency for extensions or models.

- Model Flexibility

Supports any OpenAI-compatible endpoint, including proprietary, open-source, or fine-tuned internal models.

- Compliance-Ready

All logs and interactions remain within the local cluster, aligned with enterprise audit and security requirements.

Technical Architecture Simplified

OICM Layer:

Handles GPU scheduling, containerized model deployment, and endpoint registry.

OI Code Platform:

Central governance and proxy layer providing authentication, logging, rate limiting, and per-team configuration.

VS Code Extension:

Developer interface enabling AI chat, code completion, agentic automation, and refactoring, all locally routed.

Each layer runs inside the organization’s network, whether private cloud, on-prem, or fully air-gapped.

Strategic Advantages

| Capability | Impact |
| --- | --- |
| Sovereign by Design | All data stays within your infrastructure, perfect for government and regulated sectors. |
| GPU-Accelerated | Local inference ensures high performance without internet latency. |
| Governance & Control | Track, audit, and limit model usage across teams. |
| Modular & Scalable | Works across hundreds of developers and multiple model types. |
| Air-Gap Friendly | Fully functional offline, no calls to public APIs or clouds. |

Conclusion

OI Code redefines AI-powered development for secure enterprises.

It combines sovereignty, performance, and developer productivity into one platform, ready for air-gapped environments and governed through OICM.

With OI Code, your developers code faster and your IP stays where it belongs.